Has anyone tried to mix and stream with mimoLive at 2K or 4K resolution? Any issues?

No, but I’d love to hear how you get on.

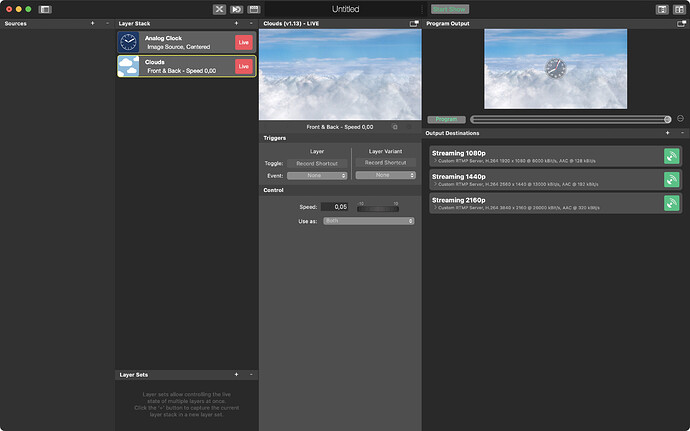

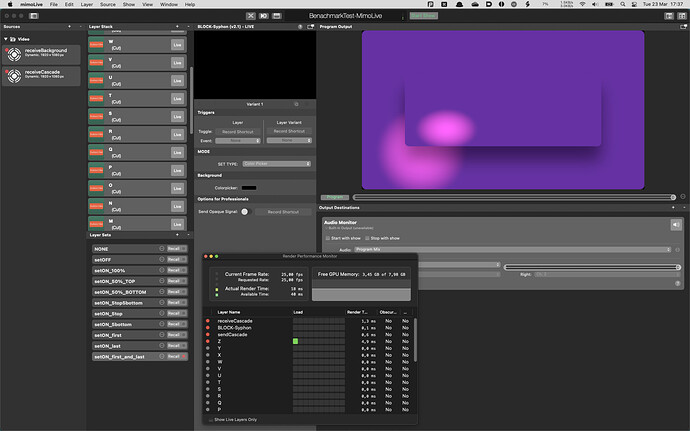

Hello @hutchinson.james_boi. I’m doing some tests to understand the feasibility of streaming with resolutions higher than 1080p. My first test is based on the following file (clouds and a clock).

I made a script to collect data on CPU and memory usage of mimoLive. Here are the results collected while streaming the above file in 2160p, 1440p, and 1080p. I don’t see a huge difference, but I guess that difference should increase if you have a number of 2160p or 1440p sources.

Streaming 2160p

| Date | AppCPU (%) | AppRAM | TotalCPU (%) |
|---|---|---|---|
| Mar 21 19:51:20 | 13.80 | 634.00 | 4.80 |
| Mar 21 19:51:23 | 13.70 | 634.00 | 3.81 |
| Mar 21 19:51:25 | 13.60 | 634.00 | 3.71 |
| Mar 21 19:51:28 | 14.10 | 602.00 | 3.70 |
| Mar 21 19:51:31 | 13.60 | 602.00 | 3.39 |
| Mar 21 19:51:33 | 13.80 | 603.00 | 4.50 |
| Mar 21 19:51:36 | 14.00 | 603.00 | 3.37 |
| Mar 21 19:51:39 | 13.70 | 602.00 | 3.44 |
| Mar 21 19:51:41 | 14.30 | 603.00 | 6.40 |
| Mar 21 19:51:44 | 13.80 | 603.00 | 3.34 |
| Mar 21 19:51:47 | 13.50 | 602.00 | 3.23 |
| Mar 21 19:51:49 | 13.70 | 604.00 | 4.21 |
| Mar 21 19:51:52 | 13.60 | 603.00 | 3.87 |
| Mar 21 19:51:55 | 16.00 | 602.00 | 3.84 |
| Mar 21 19:51:57 | 14.00 | 603.00 | 3.82 |
| Average | 13.95 | 608.93 | 3.96 |

Streaming 1440p

| Date | AppCPU (%) | AppRAM | TotalCPU (%) |
|---|---|---|---|
| Mar 21 19:53:11 | 12.70 | 585.00 | 3.75 |
| Mar 21 19:53:14 | 13.00 | 584.00 | 3.80 |
| Mar 21 19:53:17 | 12.10 | 585.00 | 3.90 |
| Mar 21 19:53:19 | 12.40 | 585.00 | 3.70 |
| Mar 21 19:53:22 | 12.10 | 584.00 | 3.90 |
| Mar 21 19:53:25 | 12.10 | 585.00 | 3.20 |
| Mar 21 19:53:27 | 12.30 | 585.00 | 3.35 |
| Mar 21 19:53:30 | 12.00 | 585.00 | 2.81 |
| Mar 21 19:53:33 | 12.10 | 585.00 | 2.81 |
| Mar 21 19:53:35 | 12.20 | 586.00 | 2.98 |
| Mar 21 19:53:38 | 12.30 | 585.00 | 2.93 |
| Mar 21 19:53:41 | 12.30 | 585.00 | 4.26 |
| Mar 21 19:53:43 | 12.30 | 586.00 | 2.86 |
| Mar 21 19:53:46 | 11.90 | 585.00 | 3.90 |
| Mar 21 19:53:49 | 12.00 | 585.00 | 2.70 |
| Average | 12.25 | 585.00 | 3.39 |

Streaming 1080p

| Date | AppCPU (%) | AppRAM | TotalCPU (%) |
|---|---|---|---|
| Mar 21 19:56:37 | 12.00 | 592.00 | 3.20 |
| Mar 21 19:56:40 | 12.00 | 591.00 | 3.69 |
| Mar 21 19:56:42 | 11.90 | 592.00 | 2.98 |
| Mar 21 19:56:45 | 12.20 | 592.00 | 3.30 |
| Mar 21 19:56:48 | 11.90 | 591.00 | 2.87 |
| Mar 21 19:56:50 | 11.90 | 592.00 | 3.30 |
| Mar 21 19:56:53 | 12.20 | 592.00 | 2.66 |
| Mar 21 19:56:56 | 11.80 | 592.00 | 2.86 |
| Mar 21 19:56:58 | 12.00 | 592.00 | 2.86 |
| Mar 21 19:57:01 | 12.00 | 592.00 | 2.88 |
| Mar 21 19:57:04 | 11.80 | 592.00 | 2.76 |
| Mar 21 19:57:06 | 12.00 | 592.00 | 2.92 |
| Mar 21 19:57:09 | 12.40 | 592.00 | 2.81 |
| Mar 21 19:57:12 | 12.40 | 592.00 | 5.94 |
| Mar 21 19:57:14 | 12.50 | 592.00 | 3.53 |
| Average | 12.07 | 591.87 | 3.24 |
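To put "not a huge difference" into numbers, here is a small Python sketch that expresses the average AppCPU of each run relative to the 1080p run. The averages are copied from the tables above; the function and variable names are only illustrative.

```python
# Average AppCPU (%) values copied from the three tables above.
avg_app_cpu = {"2160p": 13.95, "1440p": 12.25, "1080p": 12.07}


def relative_increase(value: float, baseline: float) -> float:
    """Return how much larger `value` is than `baseline`, in percent."""
    return (value / baseline - 1.0) * 100.0


baseline = avg_app_cpu["1080p"]
increase_vs_1080p = {
    resolution: round(relative_increase(cpu, baseline), 1)
    for resolution, cpu in avg_app_cpu.items()
}

for resolution, percent in increase_vs_1080p.items():
    print(f"{resolution}: {percent:+.1f}% AppCPU relative to 1080p")
```

With a single source, the 2160p run costs roughly 16% more app CPU than the 1080p run, while 1440p is within a couple of percent.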

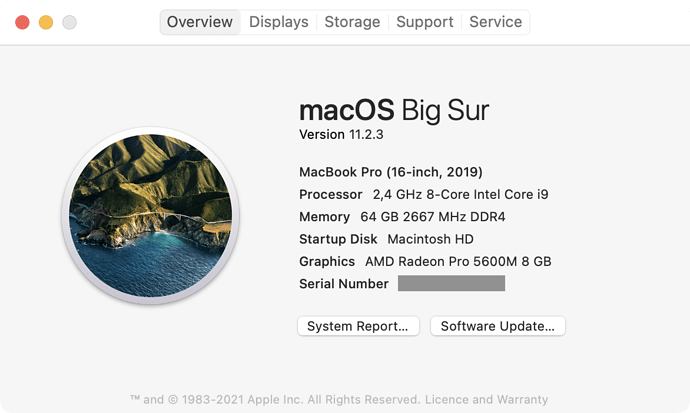

The machine used is a MacBook Pro (16-inch, 2019) with a 2.4 GHz 8-Core Intel Core i9.

Please also keep an eye on the Source Monitor and the Render Monitor (available ms).

I only tried capturing YouTube UHD with a BMD DeckLink HDMI capture card. It worked fine for the test (M1 MacBook Pro, 16 GB). It was simply to test the 4K/UHD input of the card, not a serious test of streaming capabilities.

Hi @JoPhi, I will keep an eye on the source and render monitors. Anyway, I don’t think I would use 1440p or 2160p for sources. In many online events, split screen is the preferred choice to show multiple participants at the same time. Soon (if not already), we will be able to get 1080p @ 60fps through WebRTC (mimoCall). That means that, in a 2x2 split screen, we will have 4K. My tests are intended to preserve that resolution without compromising the stability of the entire mixing/streaming process.
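The 2x2 arithmetic can be checked with a tiny Python sketch. The function name and defaults are hypothetical, and it assumes each tile is shown at its native 1920x1080 size with no borders or scaling.

```python
def grid_canvas(cols: int, rows: int, tile_w: int = 1920, tile_h: int = 1080) -> tuple[int, int]:
    """Canvas size needed to show cols x rows tiles at their native size."""
    return cols * tile_w, rows * tile_h


width, height = grid_canvas(2, 2)
tiles_in_pixels = (width * height) // (1920 * 1080)
print(f"2x2 grid of 1080p tiles -> {width}x{height}, {tiles_in_pixels}x the pixels of one tile")
```

So a 2x2 grid of 1080p participants only keeps its full detail if the program output is 3840x2160.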

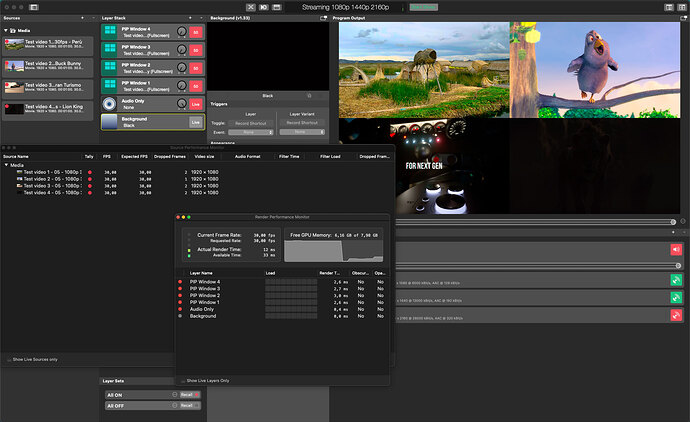

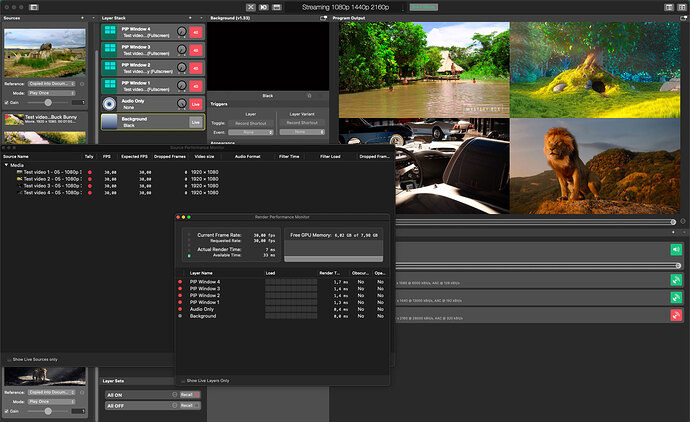

I did another couple of tests, using four 1080p 30 fps videos as sources and 2160p 30 fps as the streaming output. Here are the results.

Sources encoded in H.264

| Date | AppCPU (%) | AppRAM | TotalCPU (%) |
|---|---|---|---|
| Mar 23 13:44:44 | 67.00 | 4671.00 | 9.71 |
| Mar 23 13:44:47 | 67.50 | 4673.00 | 10.28 |
| Mar 23 13:44:50 | 63.80 | 4669.00 | 8.66 |
| Mar 23 13:44:54 | 60.70 | 4671.00 | 9.64 |
| Mar 23 13:44:57 | 62.50 | 4640.00 | 8.10 |
| Mar 23 13:45:00 | 58.80 | 4640.00 | 8.50 |
| Mar 23 13:45:03 | 67.20 | 4656.00 | 8.76 |
| Mar 23 13:45:06 | 61.90 | 4637.00 | 8.21 |
| Mar 23 13:45:10 | 63.20 | 4608.00 | 8.67 |
| Mar 23 13:45:13 | 60.30 | 4607.00 | 7.98 |
| Mar 23 13:45:16 | 60.80 | 4606.00 | 7.76 |
| Mar 23 13:45:19 | 62.00 | 4608.00 | 8.11 |
| Mar 23 13:45:23 | 61.40 | 4597.00 | 7.81 |
| Mar 23 13:45:26 | 76.60 | 4598.00 | 37.65 |
| Mar 23 13:45:29 | 62.90 | 4646.00 | 8.84 |
| Average | 63.77 | 4635.13 | 10.58 |

Sources encoded in ProRes

| Date | AppCPU (%) | AppRAM | TotalCPU (%) |
|---|---|---|---|
| Mar 23 13:47:12 | 60.60 | 4957.00 | 23.42 |
| Mar 23 13:47:15 | 58.50 | 4924.00 | 23.70 |
| Mar 23 13:47:18 | 53.40 | 4942.00 | 21.64 |
| Mar 23 13:47:21 | 56.50 | 4931.00 | 24.70 |
| Mar 23 13:47:24 | 53.90 | 4955.00 | 21.88 |
| Mar 23 13:47:27 | 55.20 | 4938.00 | 25.85 |
| Mar 23 13:47:30 | 56.60 | 4924.00 | 20.52 |
| Mar 23 13:47:33 | 51.50 | 4936.00 | 21.47 |
| Mar 23 13:47:36 | 49.60 | 4932.00 | 21.46 |
| Mar 23 13:47:39 | 49.10 | 4945.00 | 20.26 |
| Mar 23 13:47:42 | 52.40 | 4956.00 | 21.90 |
| Mar 23 13:47:45 | 52.50 | 4950.00 | 21.53 |
| Mar 23 13:47:48 | 54.80 | 4940.00 | 22.10 |
| Mar 23 13:47:51 | 54.20 | 4942.00 | 21.94 |
| Mar 23 13:47:55 | 54.10 | 4932.00 | 21.58 |
| Average | 54.19 | 4940.27 | 22.26 |

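It looks like ProRes files require much more overall CPU power than H.264 ones: total CPU averaged 22.26% versus 10.58%, even though mimoLive's own CPU usage was lower (54.19% versus 63.77%).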

Yes, it seems so. On the Mac Pro 2013 it’s different: there, H.264 is handled by a software encoder/decoder, so it’s heavy on the CPU.

Could you please do me a favour?

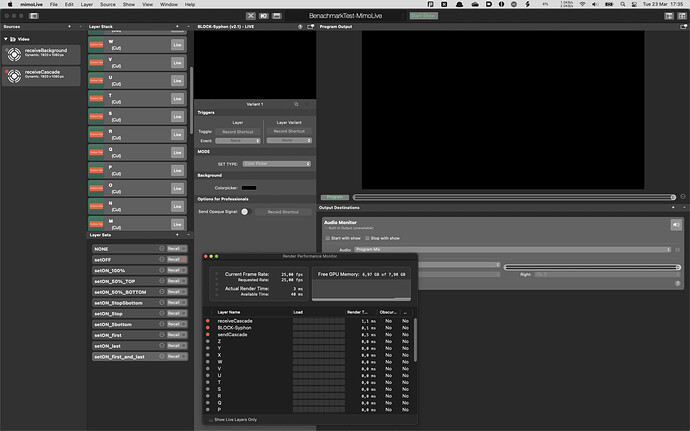

This file is a mimoLive Benchmark-Test: https://drive.google.com/file/d/1VQDn7isn62j_2RpbMoeOXm7dhRvNBml_/view?usp=sharing

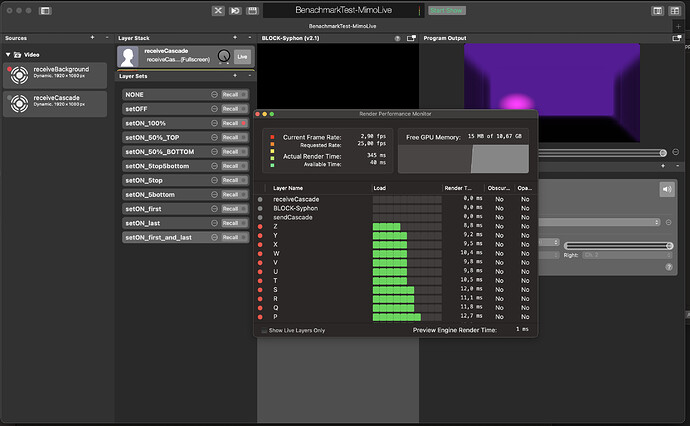

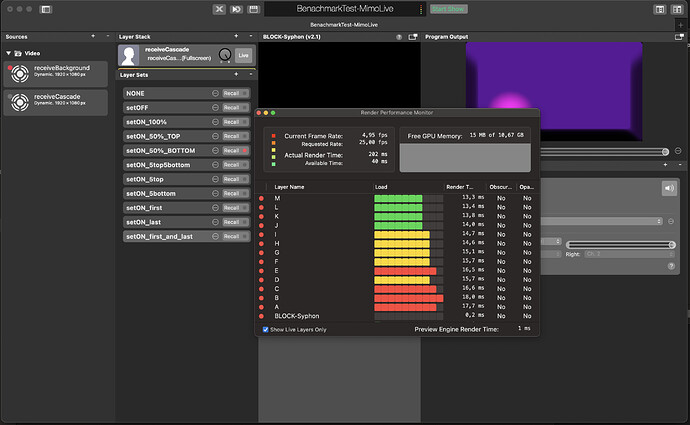

Could you please start every set once, wait 10 seconds, and check in the Render Monitor which values are shown for a. Current Frame Rate and b. Actual Render Time?

- NONE
- setOFF
- setON_100%
- setON_50%_TOP
- setON_50%_BOTTOM
- setON_5top5bottom
- setON_5top
- setON_5bottom
- setON_first
- setON_last
- setON_first_and_last

When we find a way to connect your experiences with this type of benchmark test, we may end up with a meaningful benchmark that can predict use cases. <3

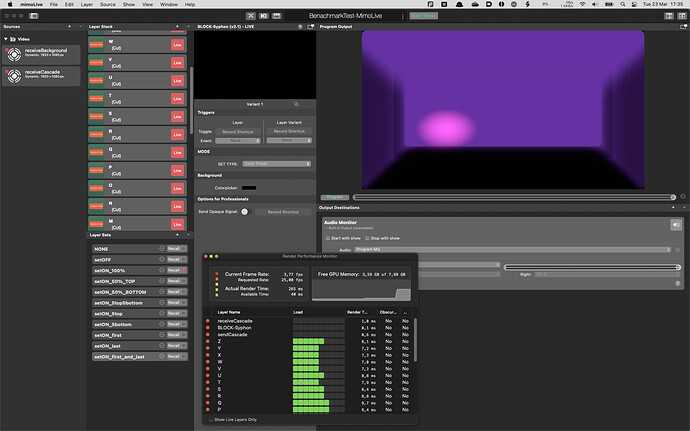

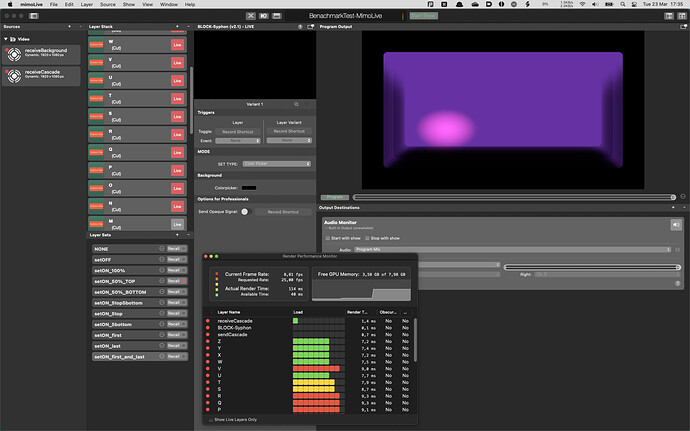

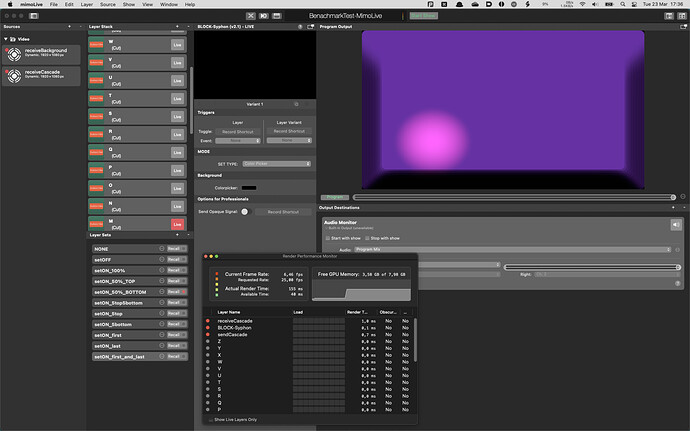

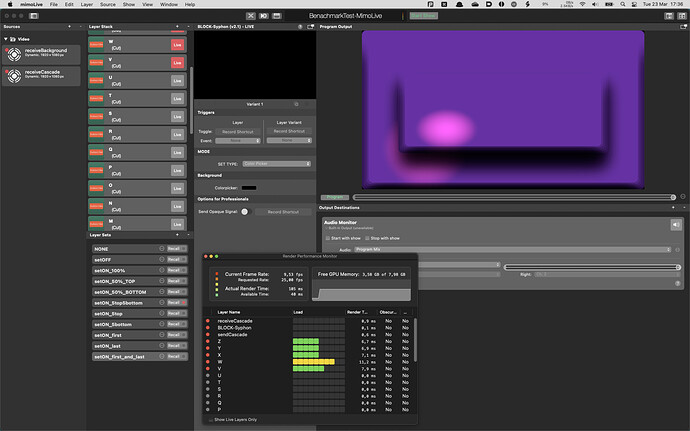

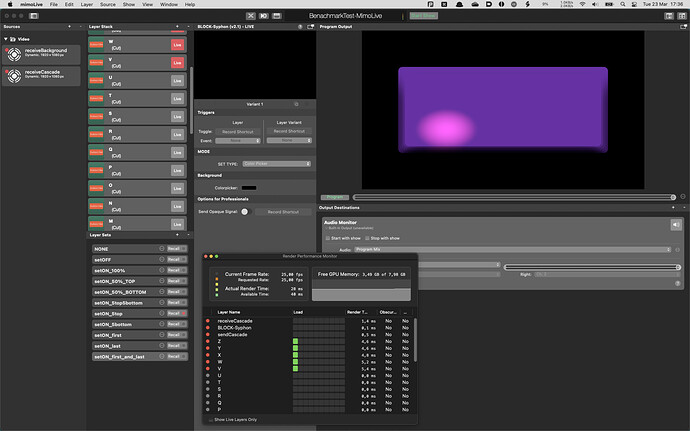

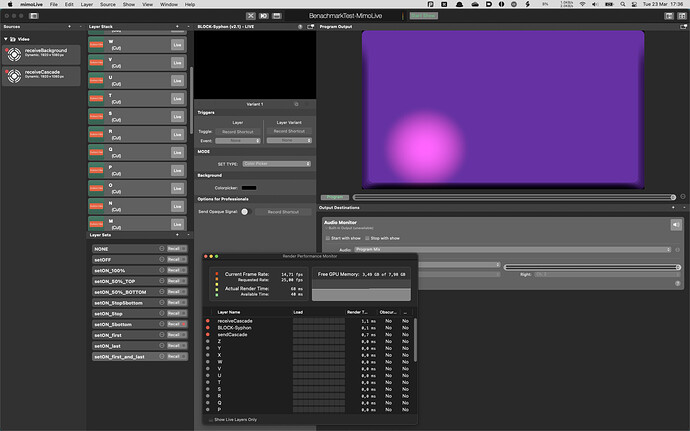

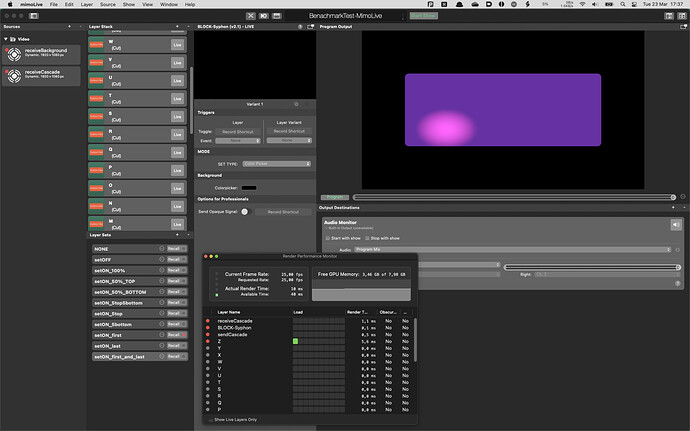

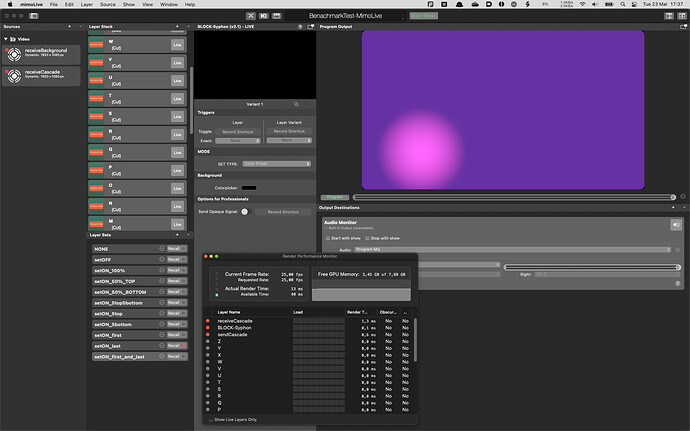

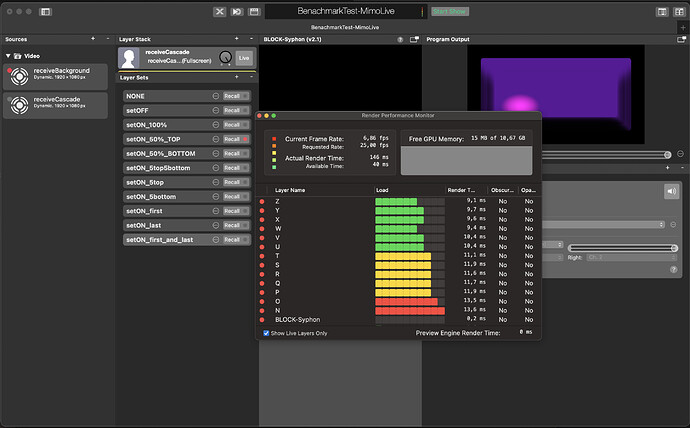

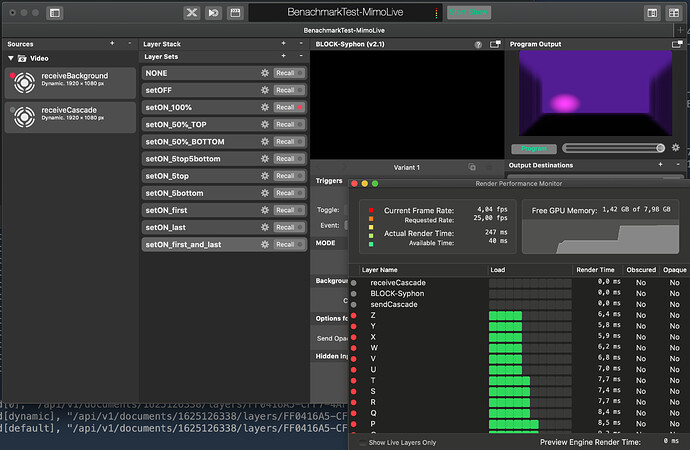

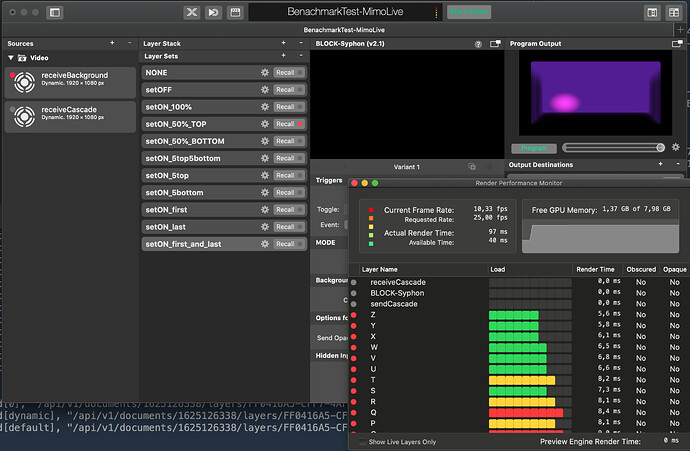

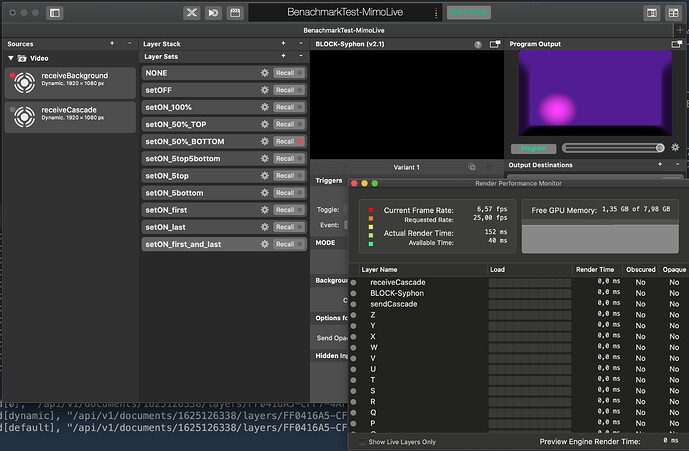

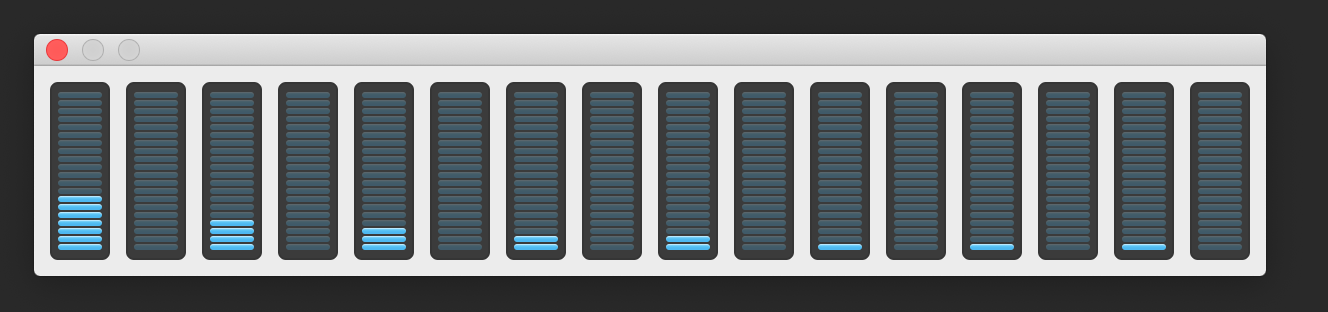

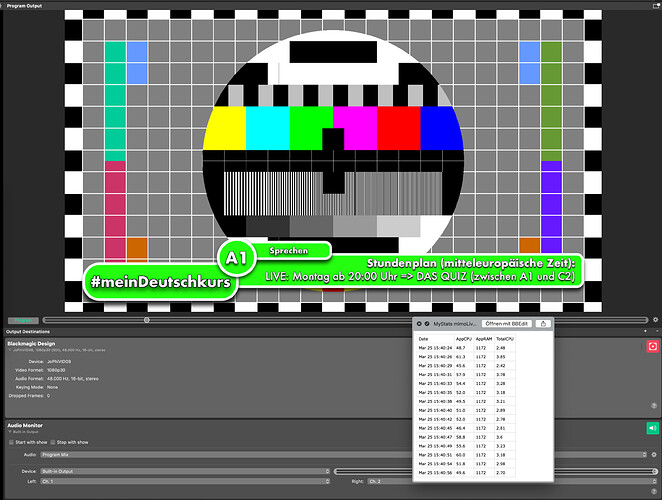

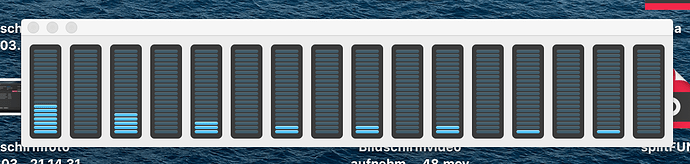

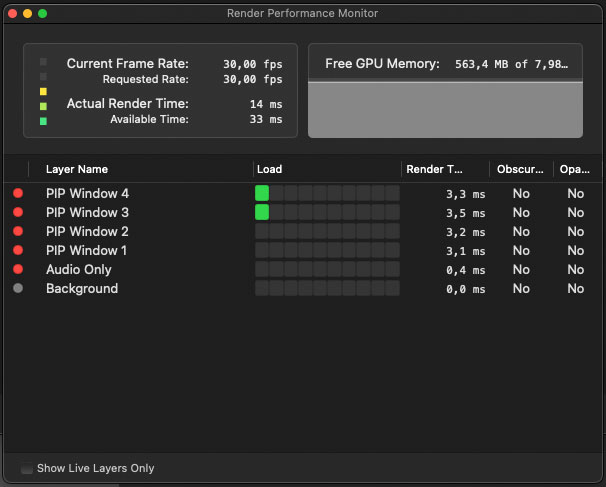

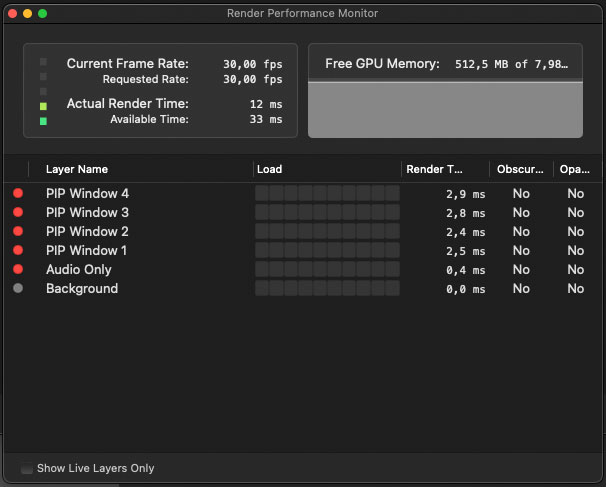

Hello @JoPhi I’m not sure I understood your request correctly. Anyway, please find below the screenshots I took 10 seconds after activating each of the layer sets.

Perfect!! Thank you so much, @Gabriele_LS!! This helps me a lot!! Was this on the MacBook Pro (16-inch, 2019), 2.4 GHz 8-Core Intel Core i9 with Radeon Pro 5500M?

@JoPhi Please find below my Mac specs. May I ask you what the benchmarks above tell you? I mean: are they related in some way to streaming in 1440p / 2160p?

Thx for asking, @Gabriele_LS !

The benchmark pushes an instance of mimoLive to its limits in some specific ways and shows the bottleneck of the system.

Current Frame Rate (setON_??: the higher the better)

Actual Render Time (setON_??: the lower the better)

It’s a mixture of vector and pixel calculations. The idea is to compare several Macs and (even) several versions of mimoLive and architectures:

M1 MacBook Pro 16 GB (Rosetta-translated) on battery:

The goal is to find figures which can be connected to real world performance, to say: Your/This Template is fully compatible with your setup.
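One rough way to relate the two Render Monitor figures to a target frame rate is a per-frame time budget: at a given frame rate, every frame has 1000 / fps milliseconds available, and the render time has to fit inside that. The sketch below is only an illustration of that arithmetic; the function names and the sample render times are hypothetical, not values read from mimoLive.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available to render one frame at the target frame rate."""
    return 1000.0 / target_fps


def headroom_ms(render_time_ms: float, target_fps: float) -> float:
    """Remaining time per frame; a negative value means frames will be dropped."""
    return frame_budget_ms(target_fps) - render_time_ms


def keeps_up(render_time_ms: float, target_fps: float) -> bool:
    """True if the measured render time fits into the frame budget."""
    return headroom_ms(render_time_ms, target_fps) >= 0.0


# Hypothetical render times, checked against a 30 fps document.
for render_time in (20.0, 40.0):
    print(
        f"{render_time:.1f} ms render time at 30 fps: "
        f"headroom {headroom_ms(render_time, 30):+.1f} ms, "
        f"keeps up: {keeps_up(render_time, 30)}"
    )
```

A template could then be called "compatible" with a machine when the slowest layer set still leaves positive headroom.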

Another benchmark (Hackintosh, price point about 2,000 Euro 8 months ago, DeckLink Quad 2 installed internally on PCIe):

Thanks for sharing. Apparently, my MBP is very close to the Hackintosh. Have you also benchmarked a recent iMac 27" or an iMac Pro or a MacPro (just to have a reference)?

P.S. Are you in the mimoLive dev team? Or just a die-hard fan of it?

@Gabriele_LS, die-hard fan.

A pity: I do not have figures for other configurations, only the ones we both can create. Who knows, maybe @hutchinson.james_boi could help out.

@Oliver_Boinx also did some tests on his devices. So there are several results of further devices at the labs of Boinx available.

- Yes, it seems to be very close, but a difference of just 1 fps can make a world of difference in certain cases.


I expect that the Rosetta translation costs 30-40% of the real performance. I cannot imagine that Rosetta is able to share memory between GPU and RAM. I have the feeling that this copy process is still present with Rosetta 2. I can’t wait for the first native beta to compare it!!

I made a couple of tests to compare the load of outputting 2160p NDI vs the load of streaming 2160p to YouTube.


Outputting to NDI

| Date | AppCPU | AppRAM | TotalCPU |
|---|---|---|---|
| Mar 25 11:41:53 | 122.40 | 1417.00 | 14.92 |
| Mar 25 11:41:56 | 124.90 | 1413.00 | 12.74 |
| Mar 25 11:41:58 | 111.20 | 1415.00 | 12.60 |
| Mar 25 11:42:01 | 112.90 | 1414.00 | 12.40 |
| Mar 25 11:42:04 | 112.70 | 1416.00 | 12.29 |
| Mar 25 11:42:06 | 111.80 | 1413.00 | 17.52 |
| Mar 25 11:42:09 | 108.50 | 1414.00 | 11.45 |
| Mar 25 11:42:12 | 123.00 | 1415.00 | 12.60 |
| Mar 25 11:42:14 | 111.10 | 1413.00 | 11.81 |
| Mar 25 11:42:17 | 109.80 | 1415.00 | 13.99 |
| Mar 25 11:42:19 | 114.60 | 1415.00 | 26.74 |
| Mar 25 11:42:22 | 113.80 | 1414.00 | 14.52 |
| Mar 25 11:42:25 | 114.10 | 1415.00 | 12.10 |
| Mar 25 11:42:27 | 113.70 | 1415.00 | 11.98 |
| Mar 25 11:42:30 | 116.60 | 1415.00 | 12.37 |
| Average | 114.74 | 1414.60 | 14.00 |


Streaming to YouTube

| Date | AppCPU | AppRAM | TotalCPU |
|---|---|---|---|
| Mar 25 11:42:53 | 71.50 | 1532.00 | 9.30 |
| Mar 25 11:42:56 | 74.40 | 1528.00 | 9.54 |
| Mar 25 11:42:58 | 71.80 | 1531.00 | 13.48 |
| Mar 25 11:43:01 | 67.20 | 1530.00 | 8.54 |
| Mar 25 11:43:04 | 77.00 | 1532.00 | 11.90 |
| Mar 25 11:43:07 | 64.00 | 1497.00 | 8.17 |
| Mar 25 11:43:10 | 71.00 | 1498.00 | 8.43 |
| Mar 25 11:43:12 | 68.10 | 1499.00 | 8.45 |
| Mar 25 11:43:15 | 65.00 | 1496.00 | 8.51 |
| Mar 25 11:43:18 | 69.60 | 1499.00 | 8.25 |
| Mar 25 11:43:21 | 67.20 | 1498.00 | 8.90 |
| Mar 25 11:43:23 | 67.60 | 1496.00 | 8.22 |
| Mar 25 11:43:26 | 71.70 | 1499.00 | 8.25 |
| Mar 25 11:43:29 | 69.30 | 1497.00 | 8.12 |
| Mar 25 11:43:32 | 66.20 | 1499.00 | 8.37 |
| Average | 69.44 | 1508.73 | 9.10 |


Apparently, NDI encoding is pretty heavy for mimoLive.

I wonder what would be the right way to split the load of a 4K production between two Macs. I’d like to use one Mac to handle the video mixing, and another Mac to handle the addition of graphics and the streaming. But how can I send the output of the first Mac to the second Mac? Maybe with a Blackmagic DeckLink Mini Monitor 4K connected to the first Mac, and a Blackmagic DeckLink Mini Recorder 4K connected to the second Mac?
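When weighing an SDI or HDMI link between the two Macs, a back-of-the-envelope estimate of the uncompressed video data rate helps to check which formats a given card and cable can carry. The Python sketch below is only an estimate under stated assumptions: 10-bit 4:2:2 sampling (20 bits per pixel), active picture only, no blanking or embedded audio. The function name is hypothetical, and the result should be compared against the formats listed in the card's own specifications.

```python
def active_video_gbps(width: int, height: int, fps: float, bits_per_pixel: int = 20) -> float:
    """Uncompressed active-picture data rate in Gbit/s.

    Assumption: 10-bit 4:2:2 sampling, i.e. 20 bits per pixel on average.
    Blanking intervals and embedded audio are ignored.
    """
    return width * height * fps * bits_per_pixel / 1e9


formats = {
    "1080p60": (1920, 1080, 60),
    "2160p30": (3840, 2160, 30),
    "2160p60": (3840, 2160, 60),
}

for name, (w, h, fps) in formats.items():
    print(f"{name}: about {active_video_gbps(w, h, fps):.2f} Gbit/s of active video")
```

Under these assumptions, 2160p30 needs about twice the link capacity of 1080p60, and 2160p60 twice that again.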

That is a good question. The Source Monitor should be included in these measurements. Currently there is no combined output monitoring available, but at some destinations you can see a tiny graph. But even the monitoring costs performance. *hmmm…

What I know is that when I turn on all measurements while creating my show, the show itself works fine afterwards (when no measurements are running.)

I see these measurements as a security-buffer for performance.


I had a 1080p25 setup on a Mac Pro 2013 with 8 cams in and two outs (yes, no joke!), which worked fine (because I avoided a. heavy picture movements and b. streaming from the same device).

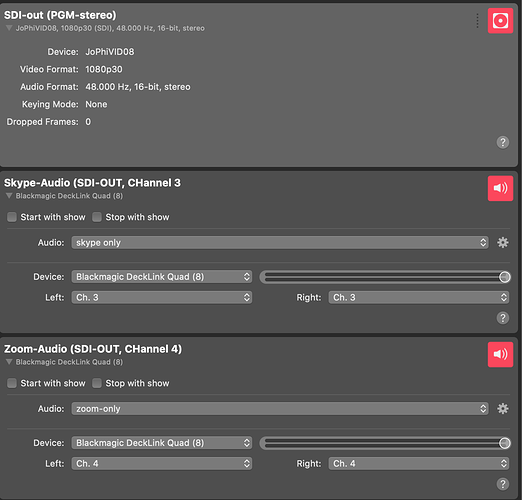

It was not possible to stream in H.264 with this load without fragments, but it could easily drive a second SDI output in 1080p25, which differed slightly from the PGM and fed the Skype/Zoom input (handled by another Mac in those days). Audio was split afterwards on the other Mac. On the Mac Pro 2013 I simply changed the inputs of Skype/Zoom (done with the help of BlackHole). It’s a pity that mimoLive does not accept only the left or only the right channel: you have to use ch1 and ch1, or ch2 and ch2. There is no “none” option, which would make audio routing easier.

But back to the topic:

With a BMD destination you can define 2 audio channels. Use Audio Sources to send audio to other channels: 3, 4, 5, 6, 7, … This way you can send several audio channels/mixes to specific channels on another computer. Here is an example:

With this technique, you can not only send more than 2 channels over SDI to another device, you can also record all audio tracks separately and recombine the audio in post: channels 1-2 carry the original mix, and channels 3 to 16 carry all the original sources in mono and/or stereo. For years I have been planning a sitcom that could be recorded like this.

- At the recorder, use a codec that allows multiple channels!!

The same at the sources:

Use BMD-Source to read Channels 1 and 2 and “Audio Only” to read Channels 3 to 16.
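The channel plan described above (program mix on channels 1-2, individual sources on 3 to 16) can be written down as a small lookup table before wiring anything up. This Python sketch is only a planning aid with hypothetical names and example sources; it does not talk to mimoLive or to any Blackmagic device.

```python
def assign_channels(sources, first_channel=3, last_channel=16):
    """Map each (name, channel_count) source onto consecutive SDI audio channels.

    Channels 1-2 are reserved for the program mix; sources start at channel 3.
    Raises ValueError if the sources do not fit into the available channels.
    """
    layout = {"program mix": [1, 2]}
    next_channel = first_channel
    for name, channel_count in sources:
        if channel_count < 1:
            raise ValueError(f"{name}: channel_count must be at least 1")
        channels = list(range(next_channel, next_channel + channel_count))
        if channels[-1] > last_channel:
            raise ValueError(f"{name}: no free channels left up to {last_channel}")
        layout[name] = channels
        next_channel += channel_count
    return layout


# Example sources (made up for illustration): two mono mics and one stereo player.
example_layout = assign_channels([("host mic", 1), ("guest mic", 1), ("music", 2)])
for name, channels in example_layout.items():
    print(f"{name}: channels {channels}")
```

The same table can be reused on the receiving Mac to decide which channels the BMD source reads and which ones go to “Audio Only” sources.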

Thanks for the detailed explanation.

I think I’m fine with 2 audio channels because I’d like to use the second machine just for adding graphics, encoding, recording, and streaming.

Are you using the Blackmagic DeckLink 8K Pro?

I would love to understand what’s the CPU consumption of outputting the program a/v using that card compared to outputting NDI or streaming to YouTube.

If you like to use it, here is the script I wrote to track the CPU usage of a single app: https://we.tl/t-Upv1lDIdE3. It runs for about one minute, and it saves results in your home directory, in a .csv file.
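For readers who cannot fetch the download, here is a minimal Python sketch of the same idea. It is not the script from the link: it assumes that sampling the standard `ps` fields `%cpu` and `rss` for one process ID is good enough, it does not collect the TotalCPU column, and all function names and the output file name are hypothetical. The defaults (15 samples, about 3 seconds apart) mirror the spacing of the rows in the tables in this thread.

```python
import csv
import subprocess
import time
from datetime import datetime
from pathlib import Path


def parse_ps_line(line: str) -> tuple[float, float]:
    """Turn '<%cpu> <rss in KiB>' into (cpu percent, memory in MiB)."""
    cpu_text, rss_kib_text = line.split()
    # Some locales print the CPU value with a decimal comma.
    return float(cpu_text.replace(",", ".")), int(rss_kib_text) / 1024.0


def sample_process(pid: int) -> tuple[float, float]:
    """Ask ps for the current CPU and resident memory of one process."""
    result = subprocess.run(
        ["ps", "-p", str(pid), "-o", "%cpu=", "-o", "rss="],
        capture_output=True, text=True, check=True,
    )
    return parse_ps_line(result.stdout)


def average(values):
    """Arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


def collect(pid, samples=15, interval_s=3.0, sampler=sample_process, sleep=time.sleep):
    """Take `samples` readings, `interval_s` seconds apart."""
    rows = []
    for index in range(samples):
        cpu, ram_mib = sampler(pid)
        rows.append((datetime.now().strftime("%b %d %H:%M:%S"), cpu, round(ram_mib, 2)))
        if index < samples - 1:
            sleep(interval_s)
    return rows


def write_csv(rows, path):
    """Write the readings plus an 'Average' row to a CSV file."""
    with open(path, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["Date", "AppCPU (%)", "AppRAM (MiB)"])
        writer.writerows(rows)
        writer.writerow([
            "Average",
            round(average([row[1] for row in rows]), 2),
            round(average([row[2] for row in rows]), 2),
        ])


# Usage (the PID is a placeholder; look it up in Activity Monitor):
#   rows = collect(12345)
#   write_csv(rows, Path.home() / "app_usage.csv")
```

The sampler and the sleep function are parameters so the collection loop can be tried out without waiting or without a running app.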

No, it’s a Quad 2 (max 1080p60 when placed in the Hackintosh or used over TB3). With the Sonnet box the performance is a bit reduced, especially over TB2 (that’s why not too many movements!! :D), but it’s possible with some tricks.

Thank you very much for sharing your script, I’ll try it later when sitting in front of it.

It is so funny: on the Mac Pro 2013, SDI out for live streaming on another device was less performance-intensive than H.264 streaming to Facebook. OK, there is no hardware encoding available. I’m not sure, but maybe the GPU content is flushed somehow through the SDI channel. Have you ever tried to send 720p (from a 1080p project) through a BMD SDI destination? This phenomenon supports this theory.

Thank you so much for the script. It’s amazing and Eye-opening!

While processing mimoLive:

Result:

While Script

Fantastic, so I have a new way to give the system a bit stress. <3

@Gabriele_LS, does mimoLive use threads? I never saw CPU load on a thread.

@JoPhi The script collects data about mimoLive (or any other app) usage in different conditions. You should first create the conditions you want to measure (i.e. mimoLive streaming to YouTube), then launch the script and wait about 1 minute. When you receive the alert, you can check the results in your home folder. Of course, you can launch the script in different conditions, and it will create a new file each time, so you can then compare the resources used in the various scenarios.